Quick Introduction

DeepEval is an open-source evaluation framework for Python that makes it easy to build and iterate on LLM applications with the following principles in mind:

- Easily "unit test" LLM outputs in a similar way to Pytest.

- Leverage various out-of-the-box LLM-evaluated and classic evaluation metrics.

- Define evaluation datasets in Python code.

- Metrics are simple to customize.

- [alpha] Bring evaluation into production using Python decorators.

Set Up a Python Environment

Go to the root directory of your project and create a virtual environment (if you don't already have one). In the CLI, run:

python3 -m venv venv

source venv/bin/activate

Installation

In your newly created virtual environment, run:

pip install -U deepeval

You can also keep track of all evaluation results by logging into our all-in-one evaluation platform, and use Confident AI's proprietary LLM evaluation agent for evaluation:

deepeval login

Contact us if you're dealing with sensitive data that has to reside in your private VPCs.

Create Your First Test Case

Run touch test_example.py to create a test file in your root directory. Open test_example.py and paste in your first test case:

import pytest
from deepeval import assert_test
from deepeval.metrics import HallucinationMetric
from deepeval.test_case import LLMTestCase

def test_hallucination():
    input = "What if these shoes don't fit?"
    context = ["All customers are eligible for a 30 day full refund at no extra cost."]

    # Replace this with the actual output of your LLM application
    actual_output = "We offer a 30-day full refund at no extra cost."
    hallucination_metric = HallucinationMetric(threshold=0.7)
    test_case = LLMTestCase(input=input, actual_output=actual_output, context=context)
    assert_test(test_case, [hallucination_metric])

Run deepeval test run from the root directory of your project:

deepeval test run test_example.py

Congratulations! Your test case should have passed ✅ Let's break down what happened.

- The variable input mimics a user input, and actual_output is a placeholder for what your application is supposed to output based on this input.

- The variable context contains the relevant information from your knowledge base, and HallucinationMetric(threshold=0.7) is a default metric provided by DeepEval for you to evaluate how factually correct your application's output is based on the provided context.

- All metric scores range from 0 to 1, and the threshold=0.7 threshold ultimately determines whether your test has passed or not.
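The role of the threshold can be pictured with a small, dependency-free sketch. It assumes a higher-is-better score between 0 and 1 and an inclusive comparison. passes_threshold is a hypothetical helper, not part of deepeval, and deepeval's own comparison may differ from metric to metric.

```python
def passes_threshold(score: float, threshold: float = 0.7) -> bool:
    """Hypothetical helper: True when a 0-1 score meets the threshold."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be between 0 and 1")
    # Assumption: higher scores are better and the comparison is inclusive.
    return score >= threshold


print(passes_threshold(0.85))  # a score above the threshold
print(passes_threshold(0.40))  # a score below the threshold
```

Raising the threshold makes the test stricter; lowering it makes the test more lenient.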

deepeval's default metrics are not evaluated using LLMs. Keep reading this tutorial to learn how to create an LLM based evaluation metric.

To save the test results locally for each test run, set the DEEPEVAL_RESULTS_FOLDER environment variable to your relative path of choice:

export DEEPEVAL_RESULTS_FOLDER="./data"

Create Your First Custom Metric

deepeval provides two types of custom metric to evaluate LLM outputs: metrics evaluated with LLMs and metrics evaluated without LLMs. Here is a brief overview of each custom metric.

LLM Evaluated Metrics

An LLM evaluated metric is one where evaluation is carried out by an LLM. Here's how you can create a custom LLM evaluated metric:

from deepeval import assert_test
from deepeval.metrics import LLMEvalMetric
from deepeval.test_case import LLMTestCase, LLMTestCaseParams
...

def test_summarization():
    input = "What if these shoes don't fit? I want a full refund."

    # Replace this with the actual output from your LLM application
    actual_output = "If the shoes don't fit, the customer wants a full refund."

    summarization_metric = LLMEvalMetric(
        name="Summarization",
        criteria="Summarization - determine if the actual output is an accurate and concise summarization of the input.",
        evaluation_params=[LLMTestCaseParams.INPUT, LLMTestCaseParams.ACTUAL_OUTPUT],
        threshold=0.5
    )
    test_case = LLMTestCase(input=input, actual_output=actual_output)
    assert_test(test_case, [summarization_metric])

Classic Metrics

A classic metric is a metric where evaluation is not carried out by another LLM. You can define a custom classic metric by defining the measure and is_successful methods upon inheriting the base BaseMetric class. Paste in the following:

from deepeval.metrics import BaseMetric
...

class LengthMetric(BaseMetric):
    # This metric checks if the output length is greater than max_length characters (10 by default)
    def __init__(self, max_length: int=10):
        self.threshold = max_length

    def measure(self, test_case: LLMTestCase):
        self.success = len(test_case.actual_output) > self.threshold
        if self.success:
            score = 1
        else:
            score = 0
        return score

    def is_successful(self):
        return self.success

    @property
    def __name__(self):
        return "Length"

def test_length():
    input = "What if these shoes don't fit?"

    # Replace this with the actual output of your LLM application
    actual_output = "We offer a 30-day full refund at no extra cost."

    length_metric = LengthMetric(max_length=10)
    test_case = LLMTestCase(input=input, actual_output=actual_output)
    assert_test(test_case, [length_metric])

Run deepeval test run from the root directory of your project again:

deepeval test run test_example.py

You should see both test_hallucination and test_length passing.

Two things to note:

- Custom metrics require a minimum_score (stored as self.threshold in the code above) as a passing criterion. In the case of our LengthMetric, the passing criterion was whether the length of actual_output is greater than max_length (10).

- We removed context in test_length since it was irrelevant to evaluating output length. All you need is input and actual_output to create a valid LLMTestCase.
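The measure and is_successful contract can be tried out without installing deepeval. The following is a minimal sketch under that assumption: SimpleTestCase and StandaloneLengthMetric are hypothetical stand-ins for LLMTestCase and a BaseMetric subclass, and they only mirror the logic of LengthMetric.

```python
from dataclasses import dataclass


@dataclass
class SimpleTestCase:
    """Hypothetical stand-in for LLMTestCase, holding only what we need."""
    input: str
    actual_output: str


class StandaloneLengthMetric:
    """Same measure/is_successful contract as LengthMetric, minus deepeval."""

    def __init__(self, max_length: int = 10):
        self.threshold = max_length
        self.success = False

    def measure(self, test_case: SimpleTestCase) -> int:
        # Passes when the output is longer than the threshold.
        self.success = len(test_case.actual_output) > self.threshold
        return 1 if self.success else 0

    def is_successful(self) -> bool:
        return self.success


metric = StandaloneLengthMetric(max_length=10)
long_case = SimpleTestCase("What if these shoes don't fit?",
                           "We offer a 30-day full refund at no extra cost.")
short_case = SimpleTestCase("What if these shoes don't fit?", "Sorry.")

long_score = metric.measure(long_case)
long_ok = metric.is_successful()
short_score = metric.measure(short_case)
short_ok = metric.is_successful()
```

Note that is_successful reflects the most recent call to measure, so it should be read right after measuring a test case.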

Combine Your Metrics

You might've noticed we have duplicated test cases for both test_hallucination and test_length (i.e., they have the same input and actual output). To avoid this redundancy, deepeval offers an easy way to apply as many metrics as you wish on a single test case.

...

def test_everything():
    input = "What if these shoes don't fit?"
    context = ["All customers are eligible for a 30 day full refund at no extra cost."]

    # Replace this with the actual output of your LLM application
    actual_output = "We offer a 30-day full refund at no extra cost."

    hallucination_metric = HallucinationMetric(threshold=0.7)
    length_metric = LengthMetric(max_length=10)
    summarization_metric = LLMEvalMetric(
        name="Summarization",
        criteria="Summarization - determine if the actual output is an accurate and concise summarization of the input.",
        evaluation_params=[LLMTestCaseParams.INPUT, LLMTestCaseParams.ACTUAL_OUTPUT],
        threshold=0.5
    )
    test_case = LLMTestCase(input=input, actual_output=actual_output, context=context)
    assert_test(test_case, [hallucination_metric, length_metric, summarization_metric])

In this scenario, test_everything only passes if all metrics are passing. Run deepeval test run again to see the results:

deepeval test run test_example.py
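The "passes only if all metrics are passing" rule can be sketched without deepeval. The scoring functions and run_all below are hypothetical stand-ins for illustration, not deepeval APIs, and the inclusive threshold comparison is an assumption.

```python
from typing import Callable, Dict


def length_score(output: str) -> float:
    """Hypothetical metric: 1.0 when the output is longer than 10 characters."""
    return 1.0 if len(output) > 10 else 0.0


def mentions_refund_score(output: str) -> float:
    """Hypothetical metric: 1.0 when the output mentions a refund."""
    return 1.0 if "refund" in output.lower() else 0.0


def run_all(output: str,
            metrics: Dict[str, Callable[[str], float]],
            threshold: float = 0.5) -> Dict[str, bool]:
    """Score the output with every metric and record pass/fail per metric."""
    return {name: fn(output) >= threshold for name, fn in metrics.items()}


metrics = {"Length": length_score, "Mentions refund": mentions_refund_score}
results = run_all("We offer a 30-day full refund at no extra cost.", metrics)

# The combined test passes only when every individual metric passes.
test_passed = all(results.values())
```

Keeping the per-metric results in a dictionary makes it easy to see which metric caused a combined test to fail.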

Evaluate Your Dataset in Bulk

First, parse your evaluation dataset into the following format:

from typing import List, Optional

class DataPoint:
    input: str
    expected_output: Optional[str]
    context: Optional[List[str]]

class Dataset:
    data_points: List[DataPoint]
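One way to get raw records into this shape is sketched below. It assumes the two classes are written as dataclasses with None defaults for the optional fields, which is an illustrative choice rather than something deepeval requires; raw_rows is made-up sample data.

```python
from dataclasses import asdict, dataclass
from typing import List, Optional


@dataclass
class DataPoint:
    input: str
    expected_output: Optional[str] = None   # assumption: optional, defaults to None
    context: Optional[List[str]] = None     # assumption: optional, defaults to None


@dataclass
class Dataset:
    data_points: List[DataPoint]


# Made-up rows, e.g. as they might come out of a JSON or CSV file.
raw_rows = [
    {
        "input": "Do you offer free shipping?",
        "expected_output": "Yes, we offer free shipping on orders over $50.",
        "context": ["We offer free shipping on all orders over $50."],
    },
    {"input": "What is your return policy?"},
]

dataset = Dataset(data_points=[DataPoint(**row) for row in raw_rows])

# Plain dictionaries are convenient to hand to a parametrized test.
as_dicts = [asdict(point) for point in dataset.data_points]
```

Missing fields come back as None, so downstream code can call .get("expected_output") or .get("context") without special-casing incomplete rows.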

Use the @pytest.mark.parametrize decorator to loop through and evaluate your entire evaluation dataset (the run command further down assumes this code is saved as test_bulk.py):

import pytest
from deepeval import assert_test
from deepeval.metrics import HallucinationMetric
from deepeval.test_case import LLMTestCase

dataset = [
    {
        "input": "What are your operating hours?",
        "context": [
            "Our company operates from 10 AM to 6 PM, Monday to Friday.",
            "We are closed on weekends and public holidays.",
            "Our customer service is available 24/7.",
        ],
    },
    {
        "input": "Do you offer free shipping?",
        "expected_output": "Yes, we offer free shipping on orders over $50.",
        "context": [
            "We offer free shipping on all orders over $50.",
        ],
    },
    {
        "input": "What is your return policy?",
        "context": [
            "Free returns within the first 30 days of purchase."
        ],
    },
]

@pytest.mark.parametrize(
    "test_case",
    dataset,
)
def test_hallucination(test_case: dict):
    input = test_case.get("input")
    expected_output = test_case.get("expected_output")
    context = test_case.get("context")

    # Replace this with the actual output of your LLM application
    actual_output = "We offer a 30-day full refund at no extra cost."

    hallucination_metric = HallucinationMetric(threshold=0.7)
    test_case = LLMTestCase(
        input=input,
        actual_output=actual_output,
        expected_output=expected_output,
        context=context
    )
    assert_test(test_case, [hallucination_metric])

To run test cases in parallel, pass the optional -n flag followed by the number of processes to use when executing deepeval test run:

deepeval test run test_bulk.py -n 2

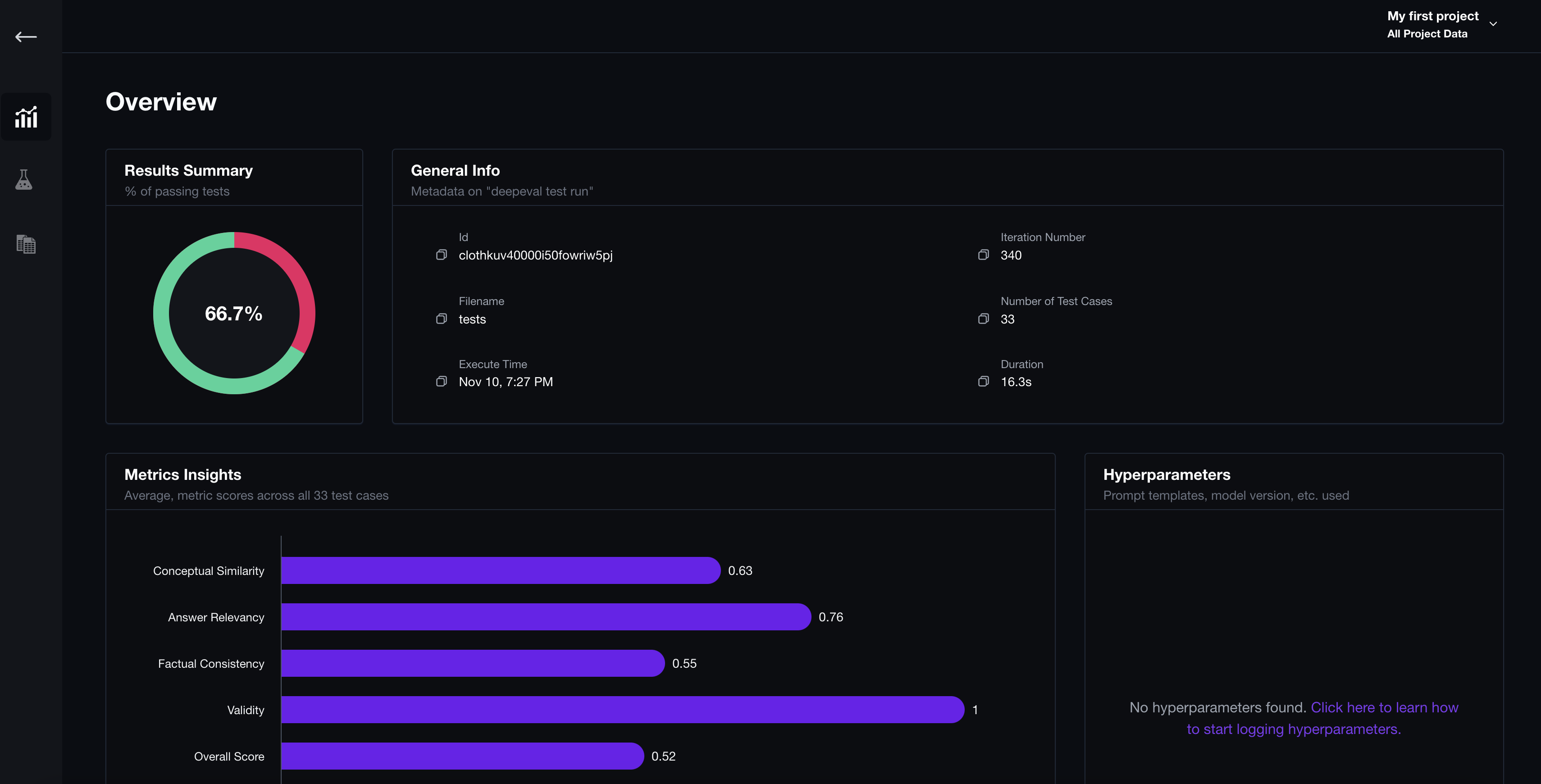

Visualize Your Results

If you have reached this point, you've likely run deepeval test run multiple times. To keep track of all future evaluation results created by deepeval, log in to Confident AI by running the following command:

deepeval login

Confident AI is the platform powering deepeval, and it offers deep insights to help you quickly figure out how to best implement your LLM application. Follow the instructions displayed on the CLI to create an account, get your Confident API key, and paste it in the CLI.

Once you've pasted your Confident API key in the CLI, run:

deepeval test run test_example.py

View Test Run

You should now see a link being returned upon test completion. Paste it in your browser to view results.

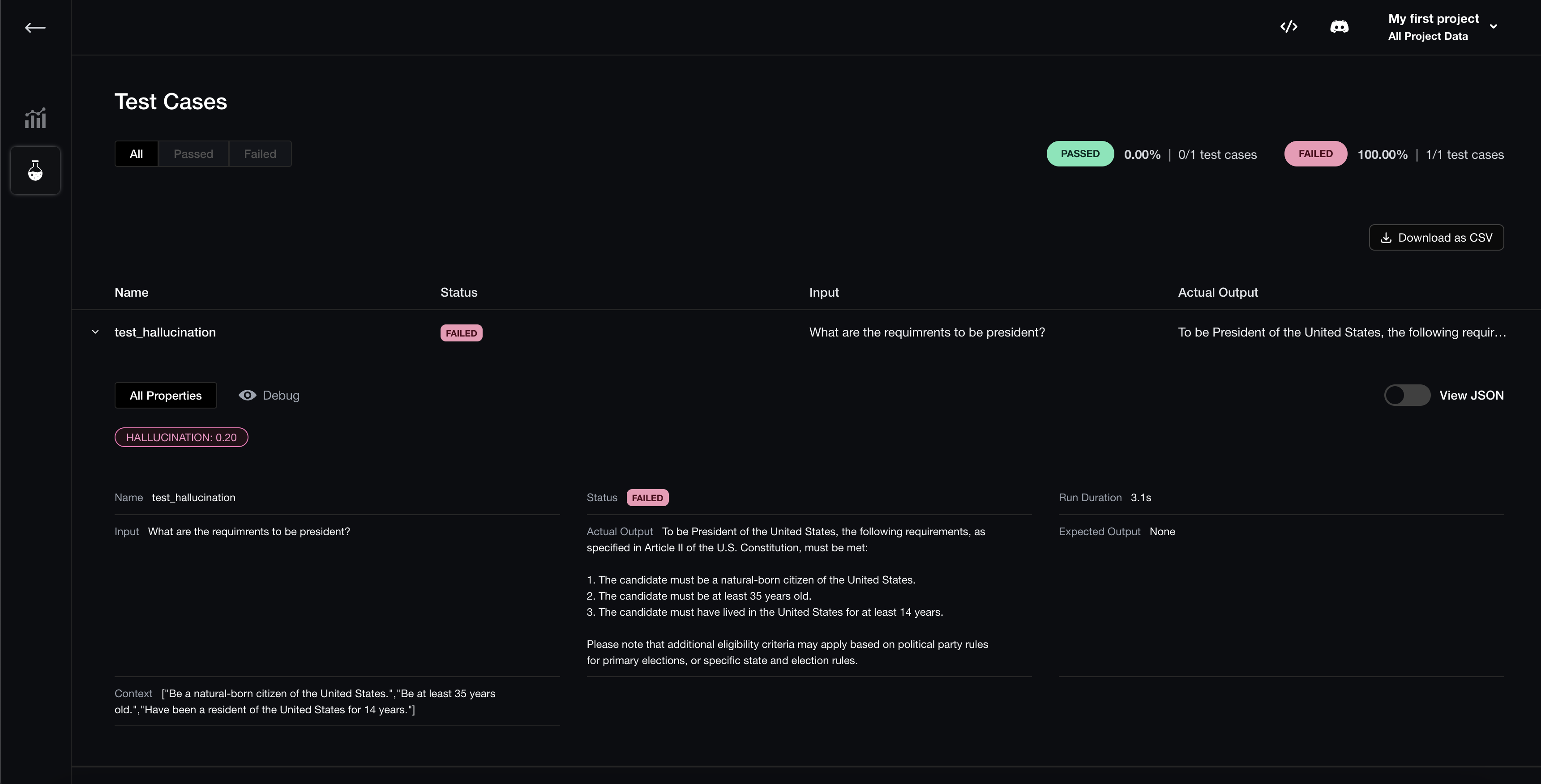

View Individual Test Cases

You can also view individual test cases for enhanced debugging:

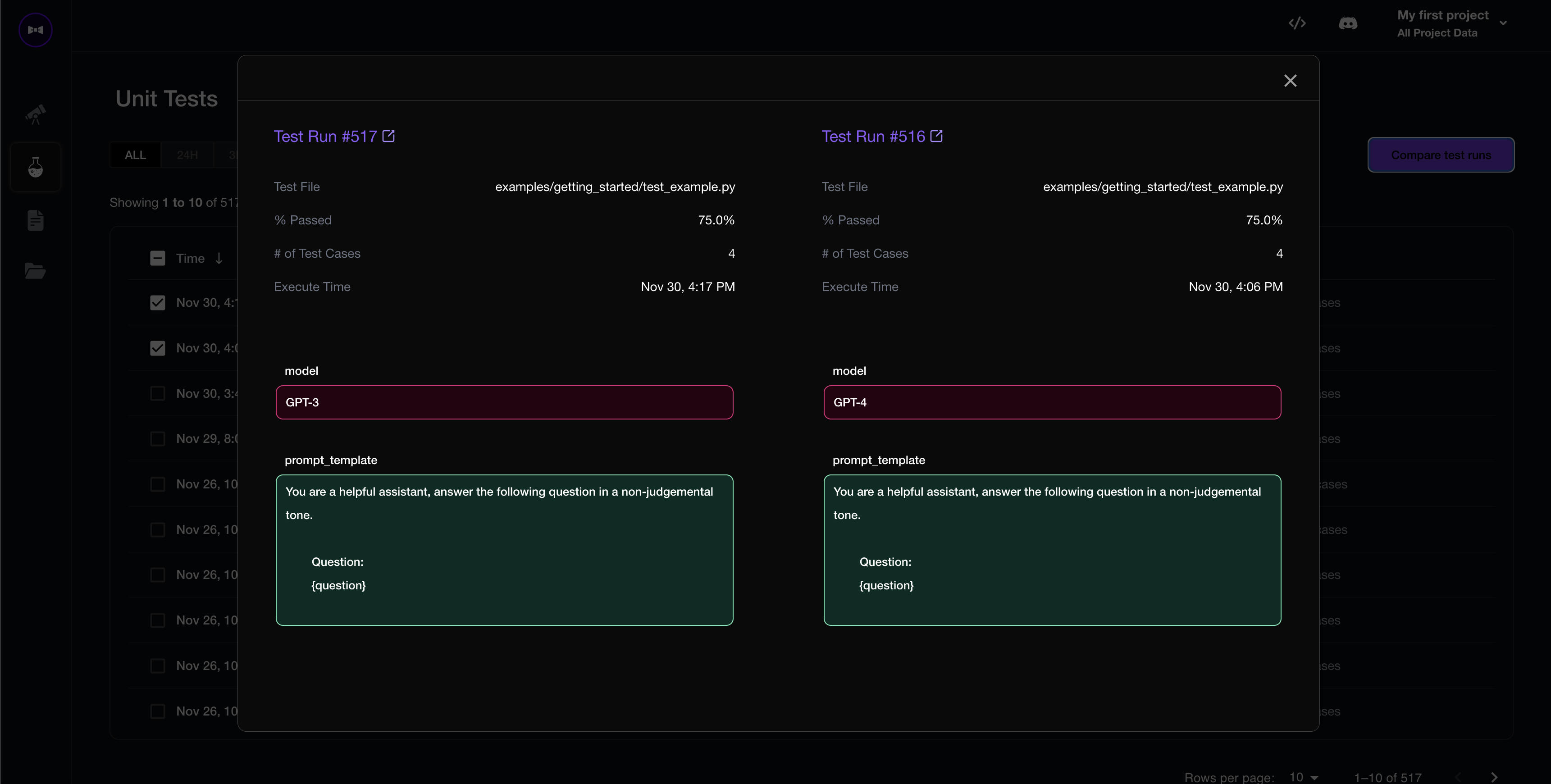

Compare Hyperparameters

To log hyperparameters (such as the prompt templates used) for your LLM application, paste the following code into test_example.py:

import deepeval
...

@deepeval.set_hyperparameters
def hyperparameters():
    return {
        "chunk_size": 500,
        "temperature": 0,
        "model": "GPT-4",
        "prompt_template": """You are a helpful assistant, answer the following question in a non-judgemental tone.

        Question:
        {question}
        """,
    }

Execute deepeval test run test_example.py again to start comparing hyperparameters for each test run.
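The {question} placeholder in the prompt template suggests it is filled in before the prompt reaches the model. The sketch below assumes Python's str.format is used for that. build_prompt is a hypothetical helper, and the dictionary is a copy of the one above with the template written on shorter lines.

```python
# Copy of the logged hyperparameters, with the template split for readability.
hyperparameters = {
    "chunk_size": 500,
    "temperature": 0,
    "model": "GPT-4",
    "prompt_template": (
        "You are a helpful assistant, answer the following question "
        "in a non-judgemental tone.\n\nQuestion:\n{question}\n"
    ),
}


def build_prompt(question: str) -> str:
    """Hypothetical helper: fill the template's {question} placeholder."""
    return hyperparameters["prompt_template"].format(question=question)


prompt = build_prompt("What if these shoes don't fit?")
print(prompt)
```

Building prompts from the same dictionary that gets logged keeps the recorded hyperparameters in step with what the application actually sends.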

Full Example

You can find the full example here on our GitHub.