Evals in Production

Quick Summary

deepeval allows you to track events in production to enable real-time evaluation on responses. By tracking events, you can leverage our hosted evaluation infrastructure to identify unsatisfactory responses and improve your evaluation dataset over time on Confident.

Setup Tracking

Simply add deepeval.track(...) in your application to start tracking events. The track() function takes in the following arguments:

event_name: typestrspecifying the event trackedmodel: typestrspecifying the name of the LLM model usedinput: typestroutput: typestr- [Optional]

distinct_id: typestrto identify different users using your LLM application - [Optional]

conversation_id: typestrto group together multiple messages under a single conversation thread - [Optional]

completion_time: typefloatthat indicates how many seconds it took your LLM application to complete - [Optional]

retrieval_context: typelist[str]that indicates the context that were retrieved in your RAG pipeline - [Optional]

token_usage: typefloat - [Optional]

token_cost: typefloat - [Optional]

additional_data: typedict - [Optional]

fail_silently: typebool, defaults to True. You should try setting this toFalseif your events are not logging properly. - [Optional]

run_on_background_thread: typebool, defaults to True. You should try setting this toFalseif your events are not logging properly.

note

Please do NOT provide placeholder values for optional parameters. Leave it blank instead.

import deepeval

...

# At the end of your LLM call

deepeval.track(

event_name="Chatbot",

model="gpt-4",

input="input",

output="output",

distinct_id="a user Id",

conversation_id="a conversation thread Id",

retrieval_context=["..."]

completion_time=8.23,

token_usage=134,

token_cost=0.23,

additional_data={"example": "example"},

fail_silently=True

run_on_background_thread=True

)

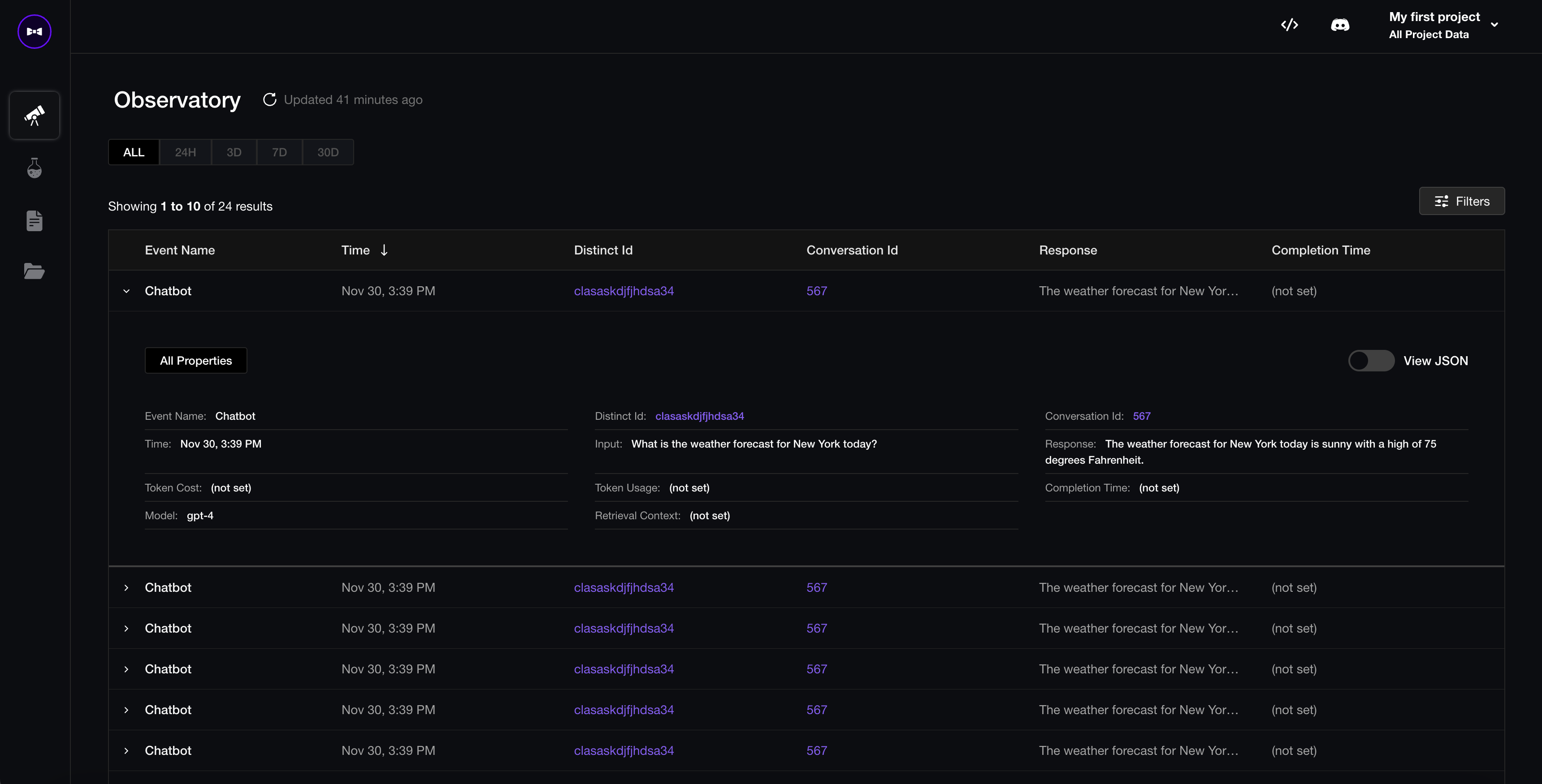

View Events on Confident AI

Lastly, go to Confident's observatory to view events and identify ones where you want to augment your evaluation dataset with.